KubeCon and CloudNativeCon 2024 Europe summary

This blog post shares my thoughts on attending KubeCon and CloudNativeCon 2024 Europe in Paris. It was my third time at this conference, and it felt bigger than last year’s in Amsterdam. The crowd apparently even had an impact on public transport: I missed part of the opening keynote because the rush-hour tram in Paris was extremely busy.

On Artificial Intelligence, Machine Learning and GPUs

Talks about AI, ML, and GPUs were everywhere this year. While these topics weren’t my main interest, I did learn about GPU resource sharing and power usage on Kubernetes. There were also ideas about offering Models-as-a-Service, which could be cool for Wikimedia Toolforge in the future.

On security, policy and authentication

This was probably my main interest at the event, given that Wikimedia Toolforge was about to migrate away from Pod Security Policy and we were evaluating different alternatives at the time.

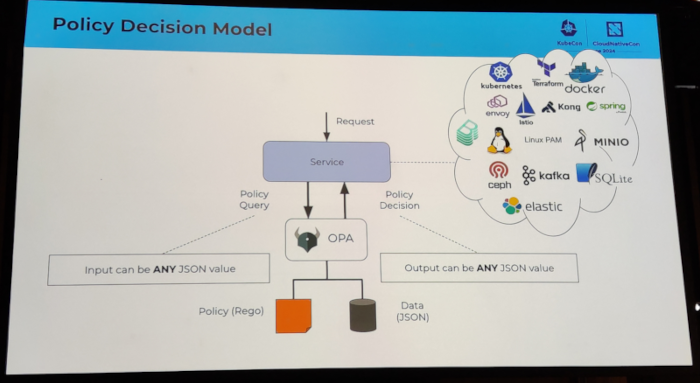

At my previous KubeCon attendances, three policy agents were present in the program schedule: Kyverno, Kubewarden and Open Policy Agent (OPA). This time, the most relevant sessions belonged to OPA alone.

One surprising bit I got from one of the OPA sessions was that it can be used to authorize Linux PAM sessions. Could this be useful for Wikimedia Toolforge?

I attended several sessions on authentication topics. I discovered the Keycloak software, which looks very promising. I also attended an OAuth2 session that I had a hard time following, because I was clearly missing some background knowledge about how OAuth2 works internally.

I also attended a couple of sessions that ended up being vendor sales talks.

On container image builds, Harbor registry, etc.

This topic was also of interest to me because, again, it is a core part of Wikimedia Toolforge.

I attended a couple of sessions regarding container image builds, including topics like general best practices, image minimization, and buildpacks. I learned about kpack, which at first sight felt like a nice simplification of how the Toolforge build service was implemented.

I also attended a session by the Harbor project maintainers where they shared some valuable information on things happening soon or in the future, for example:

- A new Harbor command line interface is coming soon. Only the first iteration, though.

- The Harbor operator, used to install and manage Harbor, is looking for new maintainers. Otherwise it is going to be archived.

- The project is now experimenting with adding support for hosting more artifact types: Maven, npm, PyPI. I wonder if they will consider hosting Debian .deb packages.

On networking

I attended a couple of sessions regarding networking.

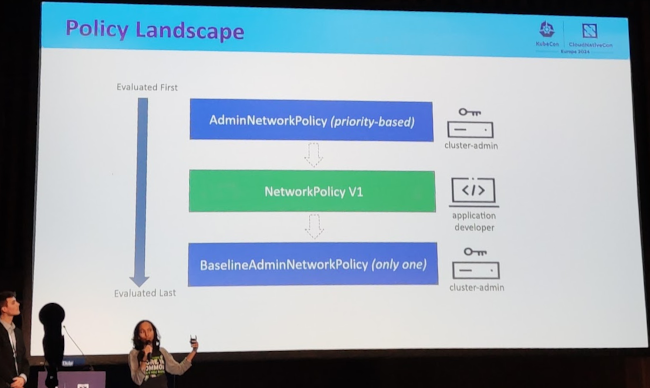

I paid special attention to one session in particular, regarding network policies. The speakers discussed new semantics being added to the Kubernetes API.

The different layers of abstraction being added to the API, the different hook points, and the override layers clearly resembled (to me at least) the network packet filtering stack of the Linux kernel (Netfilter), but without the 20-plus years of experience building the right semantics and user interfaces.

I very recently missed some semantics for limiting the number of open connections per namespace; see Phabricator T356164: [toolforge] several tools get periods of connection refused (104) when connecting to wikis. This functionality should be available in the lower-level tools, namely Netfilter. I may submit a proposal upstream at some point, so that they consider adding this to the Kubernetes API.

Final notes

In general, I believe I learned many things, and perhaps even more importantly, I re-learned some stuff I had forgotten for lack of daily exposure. I’m really happy that the cloud native way of thinking was reinforced in me, which I still need because most of my muscle memory for approaching systems architecture and engineering comes from the old pre-cloud days. That being said, I felt less engaged with the content of the conference schedule compared to last year. I don’t know whether the schedule itself was less interesting or I’m losing interest.

Finally, although it was not an official conference track, we met a bunch of folks from Wikimedia Deutschland. We had a really nice time talking about how wikibase.cloud uses Kubernetes, whether they could run in Wikimedia Cloud Services, and why structured data is so nice.